SurfaceConstellations: A Modular Hardware Platform for Ad-Hoc Reconfigurable Cross-Device Workspaces

UNIVERSITY COLLEGE LONDON, 2018

We contribute SurfaceConstellations, a modular hardware platform for linking multiple mobile devices to easily create novel cross-device workspace environments. Our platform combines the advantages of multi-monitor workspaces and multi-surface environments with the flexibility and extensibility of more recent cross-device setups. The SurfaceConstellations platform includes a comprehensive library of 3D-printed link modules to connect and arrange tablets into new workspaces, several strategies for designing setups, and a visual configuration tool for automatically generating link modules. We contribute a detailed design space of cross-device workspaces, a technique for capacitive links between tablets for automatic recognition of connected devices, designs of flexible joint connections, detailed explanations of the physical design of 3D printed brackets and support structures, and the design of a web-based tool for creating new SurfaceConstellation setups.

Nicolai Marquardt, Frederik Brudy, Can Liu, Benedikt Bengler, Christian Holz (2018). SurfaceConstellations: A Modular Hardware Platform for Ad-Hoc Reconfigurable Cross-Device Workspaces. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA.

Investigating the Role of an Overview Device in Multi-Device Collaboration

UNIVERSITY COLLEGE LONDON, 2018

The availability of mobile device ecologies enables new types of ad-hoc co-located decision-making and sensemaking practices in which people find, collect, discuss, and share information. However, little is known about what kind of device configurations are suitable for these types of tasks. This paper contributes new insights into how people use configurations of devices for one representative example task: collaborative co-located trip-planning. We present an empirical study that explores and compares three strategies to use multiple devices: no-overview, overview on own device, and a separate overview device. The results show that the overview facilitated decision- and sensemaking during a collaborative trip-planning task by aiding groups to iterate their itinerary, organize locations and timings efficiently, and discover new insights. Groups shared and discussed more opinions, resulting in more democratic decision-making. Groups provided with a separate overview device engaged more frequently and spent more time in closely-coupled collaboration.

Frederik Brudy, Joshua Kevin Budiman, Steven Houben, Nicolai Marquardt (2018). Investigating the Role of an Overview Device in Multi-Device Collaboration. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA.

Evaluation Strategies for HCI Toolkit Research

UNIVERSITY COLLEGE LONDON, 2018

Toolkit research plays an important role in the field of HCI, as it can heavily influence both the design and implementation of interactive systems. For publication, the HCI community typically expects toolkit research to include an evaluation component. The problem is that toolkit evaluation is challenging, as it is often unclear what evaluating a toolkit means and what methods are appropriate. To address this problem, we analyzed 68 published toolkit papers. From our analysis, we provide an overview of, reflection on, and discussion of evaluation methods for toolkit contributions. We identify and discuss the value of four toolkit evaluation strategies, including the associated techniques that each employs. We offer a categorization of evaluation strategies for toolkit researchers, along with a discussion of the value, potential limitations, and trade-offs associated with each strategy.

David Ledo, Steven Houben, Jo Vermeulen, Nicolai Marquardt, Lora Oehlberg, Saul Greenberg (2018). Evaluation Strategies for HCI Toolkit Research. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA.

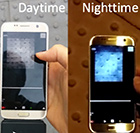

Deep Thermal Imaging: Proximate Material Type Recognition in the Wild through Deep Learning of Spatial Surface Temperature Patterns

UNIVERSITY COLLEGE LONDON, 2018

We introduce Deep Thermal Imaging, a new approach for close-range automatic recognition of materials to enhance the understanding of people and ubiquitous technologies of their proximal environment. Our approach uses a low-cost mobile thermal camera integrated into a smartphone to capture thermal textures. A deep neural network classifies these textures into material types. This approach works effectively without the need for ambient light sources or direct contact with materials. Furthermore, the use of a deep learning network removes the need to handcraft the set of features for different materials. We evaluated the performance of the system by training it to recognize 32 material types in both indoor and outdoor environments. Our approach produced recognition accuracies above 98% in 14,860 images of 15 indoor materials and above 89% in 26,584 images of 17 outdoor materials. We conclude by discussing its potential for real-time use in HCI applications and future directions.

Youngjun Cho, Nadia Bianchi-Berthouze, Nicolai Marquardt, Simon J. Julier (2018). Deep Thermal Imaging: Proximate Material Type Recognition in the Wild through Deep Learning of Spatial Surface Temperature Patterns. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA.

Inclusive Computing in Special Needs Classrooms: Designing for All

UNIVERSITY COLLEGE LONDON, 2018

With a growing call for an increased emphasis on computing in school curricula, there is a need to make computing accessible to a diversity of learners. One potential approach is to extend the use of physical toolkits, which have been found to encourage collaboration, sustained engagement and effective learning in classrooms in general. However, little is known as to whether and how these benefits can be leveraged in special needs schools, where learners have a spectrum of distinct cognitive and social needs. Here, we investigate how introducing a physical toolkit can support learning about computing concepts for special education needs (SEN) students in their classroom. By tracing how the students' interactions - both with the physical toolkit and with each other - unfolded over time, we demonstrate how the design of both the form factor and the learning tasks embedded in a physical toolkit contribute to collaboration, comprehension and engagement when learning in mixed SEN classrooms.

Zuzanna Lechelt, Yvonne Rogers, Nicola Yuill, Lena Nagl, Grazia Ragone, Nicolai Marquardt (2018). Inclusive Computing in Special Needs Classrooms: Designing for All. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA.

Gesture Elicitation Study on How to Opt-in & Opt-out from Interactions with Public Displays

UNIVERSITY COLLEGE LONDON, 2017

Public interactive displays with gesture-recognizing cameras enable new forms of interactions. However, often such systems do not yet allow passers-by a choice to engage voluntarily or disengage from an interaction. To address this issue, this paper explores how people could use different kinds of gestures or voice commands to explicitly opt-in or opt-out of interactions with public installations. We report the results of a gesture elicitation study with 16 participants, generating gestures within five gesture-types for both a commercial and entertainment scenario. We present a categorization and themes of the 430 proposed gestures, and agreement scores showing higher consensus for torso gestures and for opting-out with face/head. Furthermore, patterns indicate that participants often chose non-verbal representations of opposing pairs such as 'close and open' when proposing gestures. Quantitative results showed overall preference for hand and arm gestures, and generally a higher acceptance for gestural interaction in the entertainment setting.
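The abstract reports agreement scores but does not say how they were computed. As a minimal sketch, assuming the agreement measure commonly used in gesture elicitation studies (the sum of squared shares of identical proposals for one referent), the calculation could look like the following. The gesture labels are hypothetical and are not data from the study.

```python
from collections import Counter


def agreement_score(proposals):
    """Agreement for one referent: sum over groups of identical
    proposals of (group size / total proposals) squared."""
    if not proposals:
        raise ValueError("need at least one proposal")
    total = len(proposals)
    counts = Counter(proposals)
    return sum((n / total) ** 2 for n in counts.values())


# Hypothetical proposals from four participants for a single referent.
opt_out_hand = ["wave", "wave", "stop-palm", "wave"]
print(agreement_score(opt_out_hand))
```

A score of 1.0 means every participant proposed the same gesture; the score falls as proposals become more diverse.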

Isabel Benavente Rodriguez, Nicolai Marquardt (2017). Gesture Elicitation Study on How to Opt-in & Opt-out from Interactions with Public Displays. In Proceedings of the 2017 ACM on Interactive Surfaces and Spaces (ISS '17). ACM.

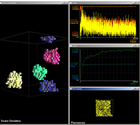

EagleSense: Tracking People and Devices in Interactive Spaces using Real-Time Top-View Depth-Sensing

UNIVERSITY COLLEGE LONDON, 2017

Real-time tracking of people's location, orientation and activities is increasingly important for designing novel ubiquitous computing applications. Top-view camera-based tracking avoids occlusion when tracking people while collaborating, but often requires complex tracking systems and advanced computer vision algorithms. To facilitate the prototyping of ubiquitous computing applications for interactive spaces, we developed EagleSense, a real-time human posture and activity recognition system with a single top-view depth-sensing camera. We contribute our novel algorithm and processing pipeline, including details for calculating silhouette-extremities features and applying gradient tree boosting classifiers for activity recognition optimized for top-view depth sensing. EagleSense provides easy access to the real-time tracking data and includes tools for facilitating the integration into custom applications. We report the results of a technical evaluation with 12 participants and demonstrate the capabilities of EagleSense with application case studies.

Chi-Jui Wu, Steven Houben, and Nicolai Marquardt (2017). EagleSense: Tracking People and Devices in Interactive Spaces using Real-Time Top-View Depth-Sensing. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI '17). ACM, New York, NY, USA, 3929-3942.

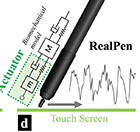

RealPen: Providing Realism in Handwriting Tasks on Touch Surfaces using Auditory-Tactile Feedback

UNIVERSITY COLLEGE LONDON, 2016

We present RealPen, an augmented stylus for capacitive tablet screens that recreates the physical sensation of writing on paper with a pencil, ball-point pen or marker pen. The aim is to create a more engaging experience when writing on touch surfaces, such as screens of tablet computers. This is achieved by regenerating the friction-induced oscillation and sound of a real writing tool in contact with paper. To generate realistic tactile feedback, our algorithm analyzes the frequency spectrum of the friction oscillation generated when writing with traditional tools, extracts principal frequencies, and uses the actuator's frequency response profile for an adjustment weighting function. We enhance the realism by providing the sound feedback aligned with the writing pressure and speed. Furthermore, we investigated the effects of superposition and fluctuation of several frequencies on human tactile perception, evaluated the performance of RealPen, and characterized users' perception and preference of each feedback type.
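The frequency analysis described above can be illustrated with a small sketch. It assumes a plain discrete Fourier transform, a fixed number of principal frequencies, and a compensation step that divides each amplitude by the actuator's gain at that frequency. The function names, the synthetic signal, and the gain curve are hypothetical and are not taken from the RealPen implementation.

```python
import cmath
import math


def magnitude_spectrum(samples, sample_rate):
    """Return (frequency in Hz, normalized magnitude) pairs via a naive DFT."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        spectrum.append((k * sample_rate / n, abs(acc) / n))
    return spectrum


def principal_frequencies(samples, sample_rate, count=2):
    """Pick the `count` strongest non-DC components, sorted by frequency."""
    spectrum = magnitude_spectrum(samples, sample_rate)[1:]  # skip the DC bin
    strongest = sorted(spectrum, key=lambda fm: fm[1], reverse=True)[:count]
    return sorted(strongest)


def compensate(components, actuator_gain):
    """Assumed weighting: divide each amplitude by the actuator's gain."""
    return [(f, m / actuator_gain(f)) for f, m in components]


# Synthetic stand-in for a recorded friction oscillation: 50 Hz plus 120 Hz.
rate, n = 1000, 200
signal = [math.sin(2 * math.pi * 50 * t / rate)
          + 0.5 * math.sin(2 * math.pi * 120 * t / rate) for t in range(n)]
peaks = principal_frequencies(signal, rate)
# Hypothetical actuator that responds twice as strongly above 100 Hz.
drive = compensate(peaks, lambda f: 2.0 if f > 100 else 1.0)
print(peaks, drive)
```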

Cho, Y., Bianchi, A., Marquardt, N., Bianchi-Berthouze, N. 2016. RealPen: Providing Realism in Handwriting Tasks on Touch Surfaces using Auditory-Tactile Feedback. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST '16). ACM, New York, NY, USA, 195-205.

Physikit: Data Engagement Through Physical Ambient Visualizations in the Home

UNIVERSITY COLLEGE LONDON, 2016

Internet of things (IoT) devices and sensor kits have the potential to democratize the access, use, and understanding of data. Despite the increased availability of low cost sensors, most of the produced data is 'black box' in nature: users often do not know how to access or interpret data. We propose a 'human-data design' approach in which end-users are given tools to create, share, and use data through tangible and physical visualizations. This paper introduces Physikit, a system designed to allow users to explore and engage with environmental data through physical ambient visualizations. We report on the design and implementation of Physikit, and present a two-week field study which showed that participants got an increased sense of the meaning of data, embellished and appropriated the basic visualizations to make them blend into their homes, and used the visualizations as a probe for community engagement and social behavior.

Houben, S., Golsteijn, C., Gallacher, S., Johnson, R., Bakker, S., Marquardt, N., Capra, L., Rogers, Y. (2016) Physikit: Data Engagement Through Physical Ambient Visualizations in the Home. Proceedings of CHI 2016, ACM.

As light as your footsteps: altering walking sounds to change perceived body weight, emotional state and gait.

UNIVERSITY COLLEGE LONDON, 2015

An ever more sedentary lifestyle is a serious problem in our society. Enhancing people's exercise adherence through technology remains an important research challenge. We propose a novel approach for a system supporting walking that draws from basic findings in neuroscience research. Our shoe-based prototype senses a person's footsteps and alters in real-time the frequency spectra of the sound they produce while walking. The resulting sounds are consistent with those produced by either a lighter or heavier body. Our user study showed that modified walking sounds change one's own perceived body weight and lead to a related gait pattern. In particular, augmenting the high frequencies of the sound leads to the perception of having a thinner body and enhances the motivation for physical activity inducing a more dynamic swing and a shorter heel strike. We here discuss the opportunities and the questions our findings open.

Tajadura-Jimenez, A., Basia, M., Deroy, O., Fairhurst, M., Marquardt, N., Bianchi-Berthouze, N. (2015)

As light as your footsteps: altering walking sounds to change perceived body weight, emotional state and gait.

In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI '15). ACM, New York, NY, USA, 2943-2952.

WatchConnect:

A Toolkit for Prototyping Smartwatch-Centric Cross-Device Applications.

UNIVERSITY COLLEGE LONDON, 2015

People increasingly use smartwatches in tandem with other devices such as their mobile phones. This allows novel cross-device applications using the watch as both input device and miniature output display. However, despite the increasing availability of smartwatches, prototyping cross-device watch applications remains a challenging task. Developers are often limited in the applications they can explore because most available toolkits provide only limited access to different types of input sensors for cross-device interactions. To address this problem, we introduce WatchConnect, a toolkit for rapidly prototyping cross-device applications and interaction techniques with smartwatches. The toolkit provides developers with (1) an extendable hardware platform that emulates a smartwatch, (2) a user interface framework that integrates with an existing UI builder, and (3) a rich set of input and output events using a range of built-in sensor mappings. We evaluate the toolkit's capabilities with seven interaction techniques and applications.

Houben, S., Marquardt, N. (2015)

WatchConnect: A Toolkit for Prototyping Smartwatch-Centric Cross-Device Applications.

In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI '15). ACM, New York, NY, USA, 1247-1256.

Proxemic Flow:

Dynamic Peripheral Floor Visualizations for Revealing and Mediating Large Surface Interactions.

UNIVERSITY COLLEGE LONDON, 2015

Interactive large surfaces have recently become commonplace for interactions in public settings. The fact that people can engage with them and the spectrum of possible interactions, however, often remain invisible and can be confusing or ambiguous to passersby. In this paper, we explore the design of dynamic peripheral floor visualizations for revealing and mediating large surface interactions. Extending earlier work on interactive illuminated floors, we introduce a novel approach for leveraging floor displays in a secondary, assisting role to aid users in interacting with the primary display. We illustrate a series of visualizations with the illuminated floor of the Proxemic Flow system. In particular, we contribute a design space for peripheral floor visualizations that (a) provides peripheral information about tracking fidelity with personal halos, (b) makes interaction zones and borders explicit for easy opt-in and opt-out, and (c) gives cues inviting for spatial movement or possible next interaction steps through wave, trail, and footstep animations. We demonstrate our proposed techniques in the context of a large surface application and discuss important design considerations for assistive floor visualizations.

Vermeulen, J., Luyten, K., Coninx, K., Marquardt, N., and Bird, J. (2015)

Proxemic Flow: Dynamic Peripheral Floor Visualizations for Revealing and Mediating Large Surface Interactions.

In Proceedings of INTERACT (4) 2015, pp. 264-281.

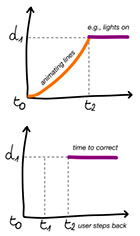

The Design of Slow-Motion Feedback

UNIVERSITY COLLEGE LONDON, 2014

The misalignment between the timeframe of systems and that of their users can cause problems, especially when the system relies on implicit interaction. It makes it hard for users to understand what is happening and leaves them little chance to intervene. This paper introduces the design concept of slow-motion feedback, which can help to address this issue. A definition is provided, together with an overview of existing applications of this technique.

Vermeulen, J., Luyten, K., Coninx, K., Marquardt, N. (2014)

The Design of Slow-Motion Feedback.

In Proceedings of the 2014 ACM Conference on Designing Interactive Systems - DIS '14. ACM, New York, NY, USA, 267-270.

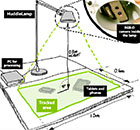

HuddleLamp: Spatially-Aware Mobile Displays for Ad-hoc Around-the-Table Collaboration

UNIVERSITY COLLEGE LONDON, 2014

We present HuddleLamp, a desk lamp with an integrated RGB-D camera that precisely tracks the movements and positions of mobile displays and hands on a table. This enables a new breed of spatially-aware multi-user and multi-device applications for around-the-table collaboration without an interactive tabletop. At any time, users can add or remove displays and reconfigure them in space in an ad-hoc manner without the need to install any software or attach markers. Additionally, hands are tracked to detect interactions above and between displays, enabling fluent cross-device interactions. We contribute a novel hybrid sensing approach that uses RGB and depth data to increase tracking quality and a technical evaluation of its capabilities and limitations. For enabling installation-free ad-hoc collaboration, we also introduce a web-based architecture and JavaScript API for future HuddleLamp applications. Finally, we demonstrate the resulting design space using five examples of cross-device interaction techniques.

Rädle, R., Jetter, H.-C., Marquardt, N., Reiterer, H., Rogers, Y. (2014)

HuddleLamp: Spatially-Aware Mobile Displays for Ad-hoc Around-the-Table Collaboration

In Proceedings of the Ninth ACM International Conference on Interactive Tabletops and Surfaces (ITS '14). ACM, New York, NY, USA, 45-54.

GroupTogether:

Cross-Device Interaction via Micro-mobility and F-formations

MICROSOFT RESEARCH REDMOND, 2012

GroupTogether is a system that explores cross-device interaction using two sociological constructs. First, F-formations concern the distance and relative body orientation among multiple users, which indicate when and how people position themselves as a group. Second, micromobility describes how people orient and tilt devices towards one another to promote fine-grained sharing during co-present collaboration. We sense these constructs using: (a) a pair of overhead Kinect depth cameras to sense small groups of people, (b) low-power 8GHz band radio modules to establish the identity, presence, and coarse-grained relative locations of devices, and (c) accelerometers to detect tilting of slate devices. The resulting system supports fluid, minimally disruptive techniques for co-located collaboration by leveraging the proxemics of people as well as the proxemics of devices.

Marquardt, N., Hinckley, K. and Greenberg, S. (2012)

Cross-Device Interaction via Micro-mobility and F-formations.

In ACM Symposium on User Interface Software and Technology - UIST 2012. (Cambridge, MA, USA), ACM Press, pages 13-22, October 7-10.

Gradual Engagement between Digital Devices as a Function of Proximity:

From Awareness to Progressive Reveal to Information Transfer

UNIVERSITY OF CALGARY, GROUPLAB

MICROSOFT RESEARCH REDMOND, 2011-2012

The increasing number of digital devices in our environment enriches how we interact with digital content. Yet, cross-device information transfer - which should be a common operation - is surprisingly difficult. One has to know which devices can communicate, what information they contain, and how information can be exchanged. To mitigate this problem, we formulate the gradual engagement design pattern that generalizes prior work in proxemic interactions and informs future system designs. The pattern describes how we can design device interfaces to gradually engage the user by disclosing connectivity and information exchange capabilities as a function of inter-device proximity. These capabilities flow across three stages: (1) awareness of device presence/connectivity, (2) reveal of exchangeable content, and (3) interaction methods for transferring content between devices tuned to particular distances and device capabilities. We illustrate how we can apply this pattern to design, and show how existing and novel interaction techniques for cross-device transfers can be integrated to flow across its various stages. We explore how techniques differ between personal and semi-public devices, and how the pattern supports interaction of multiple users.
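The three stages of the pattern can be read as a mapping from inter-device distance to an engagement stage. The sketch below is one possible reading under stated assumptions: the distance thresholds (in metres) and all names are hypothetical and do not come from the paper, which describes a design pattern rather than fixed values.

```python
from enum import Enum


class Stage(Enum):
    NONE = 0        # devices too far apart to engage
    AWARENESS = 1   # stage 1: presence and connectivity are shown
    REVEAL = 2      # stage 2: exchangeable content is revealed
    TRANSFER = 3    # stage 3: transfer techniques become available


def stage_for_distance(distance_m, awareness_at=3.0, reveal_at=1.5,
                       transfer_at=0.5):
    """Map a distance to a stage; all thresholds are assumed values."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= transfer_at:
        return Stage.TRANSFER
    if distance_m <= reveal_at:
        return Stage.REVEAL
    if distance_m <= awareness_at:
        return Stage.AWARENESS
    return Stage.NONE


# A device approaching a display passes through the stages in order.
for d in (4.0, 2.5, 1.0, 0.3):
    print(d, stage_for_distance(d).name)
```

In practice the thresholds would likely be tuned per device type, since the abstract notes that techniques differ between personal and semi-public devices.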

Marquardt, N., Ballendat, T., Boring, S., Greenberg, S. and Hinckley, K. (2012)

Gradual Engagement between Digital Devices as a Function of Proximity: From Awareness to Progressive Reveal to Information Transfer.

In Proceedings of Interactive Tabletops and Surfaces - ACM ITS 2012 (Boston, USA), ACM Press, 10 pages, November 11-14.

The Proximity Toolkit:

Prototyping Proxemic Interactions in Ubiquitous Computing Ecologies

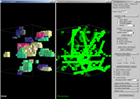

UNIVERSITY OF CALGARY, GROUPLAB, 2010-2011

People naturally understand and use proxemic relationships (e.g., their distance and orientation towards others) in everyday situations. However, only a few ubiquitous computing (ubicomp) systems interpret such proxemic relationships to mediate interaction (proxemic interaction). A technical problem is that developers find it challenging and tedious to access proxemic information from sensors. Our Proximity Toolkit solves this problem. It simplifies the exploration of interaction techniques by supplying fine-grained proxemic information between people, portable devices, large interactive surfaces, and other non-digital objects in a room-sized environment. The toolkit offers three key features. 1) It facilitates rapid prototyping of proxemic-aware systems by supplying developers with the orientation, distance, motion, identity, and location information between entities. 2) It includes various tools, such as a visual monitoring tool, that allow developers to visually observe, record and explore proxemic relationships in 3D space. 3) Its flexible architecture separates sensing hardware from the proxemic data model derived from these sensors, which means that a variety of sensing technologies can be substituted or combined to derive proxemic information. We illustrate the versatility of the toolkit with proxemic-aware systems built by students.
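To make the kind of proxemic information mentioned above concrete, the following sketch computes distance and relative orientation between two tracked entities in a 2D plane. It is an illustration only: the `Entity` class and function names are hypothetical and are not the Proximity Toolkit's API, and the facing tolerance is an assumed value.

```python
import math
from dataclasses import dataclass


@dataclass
class Entity:
    name: str
    x: float            # position in metres
    y: float
    heading_deg: float   # facing direction, 0 = +x axis, counter-clockwise


def distance(a, b):
    """Euclidean distance between two entities."""
    return math.hypot(b.x - a.x, b.y - a.y)


def bearing_to(a, b):
    """Angle between a's heading and the direction from a to b,
    normalized to the range [-180, 180) degrees."""
    angle = math.degrees(math.atan2(b.y - a.y, b.x - a.x))
    return (angle - a.heading_deg + 180.0) % 360.0 - 180.0


def is_facing(a, b, tolerance_deg=30.0):
    """True if b lies within the assumed tolerance of a's heading."""
    return abs(bearing_to(a, b)) <= tolerance_deg


person = Entity("person", 0.0, 0.0, 0.0)
display = Entity("display", 2.0, 0.0, 180.0)
print(distance(person, display), is_facing(person, display))
```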

Marquardt, N., Diaz-Marino, R., Boring, S. and Greenberg, S. (2011)

The Proximity Toolkit: Prototyping Proxemic Interactions in Ubiquitous Computing Ecologies.

In ACM Symposium on User Interface Software and Technology - UIST 2011. (Santa Barbara, CA, USA), ACM Press, 11 pages, October 16-18. Includes video figure, duration 4:19.

The Continuous Interaction Space: Interaction Techniques Unifying Touch and Gesture On and Above a Digital Surface

UNIVERSITY OF CALGARY, GROUPLAB, 2010-2011

The rising popularity of digital table surfaces has spawned considerable interest in new interaction techniques. Most interactions fall into one of two modalities: 1) direct touch and multi-touch (by hand and by tangibles) directly on the surface, and 2) hand gestures above the surface. The limitation is that these two modalities ignore the rich interaction space between them. To move beyond this limitation, we first contribute a unification of these discrete interaction modalities called the continuous interaction space. The idea is that many interaction techniques can be developed that go beyond these two modalities, where they can leverage the space between them. That is, we believe that the underlying system should treat the space on and above the surface as a continuum, where a person can use touch, gestures, and tangibles anywhere in the space and naturally move between them. Our second contribution illustrates this, where we introduce a variety of interaction categories that exploit the space between these modalities. For example, with our Extended Continuous Gestures category, a person can start an interaction with a direct touch and drag, then naturally lift off the surface and continue their drag with a hand gesture over the surface. For each interaction category, we implement an example (or use prior work) that illustrates how that technique can be applied. In summary, our primary contribution is to broaden the design space of interaction techniques for digital surfaces, where we populate the continuous interaction space both with concepts and examples that emerge from considering this space as a continuum.

Marquardt, N., Jota, R., Greenberg, S. and Jorge, J. (2011)

The Continuous Interaction Space: Interaction Techniques Unifying Touch and Gesture On and Above a Digital Surface.

In Proceedings of the 13th IFIP TC13 Conference on Human Computer Interaction - INTERACT 2011. (Lisbon, Portugal), 16 pages, September 5-9.

Designing User-, Hand-, and Handpart-Aware Tabletop Interactions with the TOUCHID Toolkit

UNIVERSITY OF CALGARY, GROUPLAB, 2011

Recent work in multi-touch tabletop interaction introduced many novel techniques that let people manipulate digital content through touch. Yet most only detect touch blobs. This ignores richer interactions that would be possible if we could identify (1) which hand, (2) which part of the hand, (3) which side of the hand, and (4) which person is actually touching the surface. Fiduciary-tagged gloves were previously introduced as a simple but reliable technique for providing this information. The problem is that its low-level programming model hinders the way developers could rapidly explore new kinds of user- and handpart-aware interactions. We contribute the TOUCHID toolkit to solve this problem. It allows rapid prototyping of expressive multi-touch interactions that exploit the aforementioned characteristics of touch input. TOUCHID provides an easy-to-use event-driven API. It also provides higher-level tools that facilitate development: a glove configurator to rapidly associate particular glove parts to handparts; and a posture configurator and gesture configurator for registering new hand postures and gestures for the toolkit to recognize. We illustrate TOUCHID's expressiveness by showing how we developed a suite of techniques (which we consider a secondary contribution) that exploits knowledge of which handpart is touching the surface.

Marquardt, N., Kiemer, J., Ledo, D., Boring, S. and Greenberg, S. (2011)

Designing User-, Hand-, and Handpart-Aware Tabletop Interactions with the TOUCHID Toolkit.

ACM International Conference on Interactive Tabletops and Surfaces-ITS 2011. (Kobe, Japan), ACM Press, 10 pages, November 13-16.

The Fat Thumb:

Using the Thumb's Contact Size for Single-Handed Mobile Interaction.

UNIVERSITY OF CALGARY, GROUPLAB, 2011

Modern mobile devices allow a rich set of multi-finger interactions that combine modes into a single fluid act, for example, one finger for panning blending into a two-finger pinch gesture for zooming. Such gestures require the use of both hands: one holding the device while the other is interacting. While on the go, however, only one hand may be available to both hold the device and interact with it. This mostly limits interaction to a single-touch (i.e., the thumb), forcing users to switch between input modes explicitly. In this paper, we contribute the Fat Thumb interaction technique, which uses the thumb's contact size as a form of simulated pressure. This adds a degree of freedom, which can be used, for example, to integrate panning and zooming into a single interaction. Contact size determines the mode (i.e., panning with a small size, zooming with a large one), while thumb movement performs the selected mode. We discuss nuances of the Fat Thumb based on the thumb's limited operational range and motor skills when that hand holds the device. We compared Fat Thumb to three alternative techniques, where people had to pan and zoom to a predefined region on a map. Participants performed fastest with the fewest strokes using Fat Thumb.
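The mode selection described above (small contact size pans, large contact size zooms) can be sketched as a simple threshold on contact size. This is an illustration under assumptions, not the authors' implementation: the normalized contact size, the threshold, the zoom gain, and the mapping of vertical movement to zoom are all hypothetical.

```python
from dataclasses import dataclass

ZOOM_THRESHOLD = 0.6  # assumed threshold on contact size normalized to 0..1
ZOOM_GAIN = 0.01      # assumed scale change per pixel of vertical movement


@dataclass
class View:
    x: float = 0.0
    y: float = 0.0
    scale: float = 1.0


def select_mode(contact_size, threshold=ZOOM_THRESHOLD):
    """Large contact (flat thumb) selects zoom; small contact selects pan."""
    return "zoom" if contact_size >= threshold else "pan"


def apply_thumb_move(view, dx, dy, contact_size):
    """Apply one thumb movement to the view in the mode chosen by contact size."""
    if select_mode(contact_size) == "zoom":
        factor = max(0.1, 1.0 + ZOOM_GAIN * dy)  # clamp to avoid inverting
        return View(view.x, view.y, view.scale * factor)
    return View(view.x + dx, view.y + dy, view.scale)


view = apply_thumb_move(View(), dx=10, dy=5, contact_size=0.2)    # pans
view = apply_thumb_move(view, dx=0, dy=50, contact_size=0.9)      # zooms
print(view)
```

A real device would need per-user calibration of the threshold, since thumb size and grip vary between people.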

Boring, S., Ledo, D., Chen, X., Marquardt, N., Tang, A., Greenberg, S. (2012)

The Fat Thumb: Using the Thumb's Contact Size for Single-Handed Mobile Interaction.

In Proceedings of the ACM Conference on Mobile HCI - MobileHCI 2012. ACM Press, pages 39-48, September 21-24, 2012.

Proxemic Interaction:

Designing for a Proximity and Orientation-Aware Environment

UNIVERSITY OF CALGARY, GROUPLAB, 2009-2010

In the everyday world, much of what we do is dictated by how we interpret spatial relationships, or proxemics. What is surprising is how little proxemics are used to mediate people's interactions with surrounding digital devices. We imagine proxemic interaction as devices with fine-grained knowledge of nearby people and other devices - their position, identity, movement, and orientation - and how such knowledge can be exploited to design interaction techniques. In particular, we show how proxemics can: regulate implicit and explicit interaction; trigger such interactions by continuous movement or by movement of people and devices in and out of discrete proxemic regions; mediate simultaneous interaction of multiple people; and interpret and exploit people's directed attention to other people and objects. We illustrate these concepts through an interactive media player running on a vertical surface that reacts to the approach, identity, movement and orientation of people and their personal devices.

Ballendat, T., Marquardt, N. and Greenberg, S. (2010)

Proxemic Interaction: Designing for a Proximity and Orientation-Aware Environment.

In Proceedings of the ACM Conference on Interactive Tabletops and Surfaces - ACM ITS 2010. (Saarbruecken, Germany), ACM Press, 10 pages, November 7-10.

Applying Proxemics to Mediate People's Interaction with Devices in Ubiquitous Computing Ecologies

UNIVERSITY OF CALGARY, GROUPLAB, 2009-2010

Through vastly increasing availability of digital devices in people's everyday life, ubiquitous computing (ubicomp) ecologies are emerging. An important challenge here is the design of adequate techniques that facilitate people's interaction with these ubicomp devices. In my research, I explore how the knowledge of people's and devices' spatial relationships - called proxemics - can be applied to interaction design. I introduce concepts of proxemic interactions that consider fine-grained information of proxemics to mediate people's interactions with digital devices, such as large digital surfaces or portable personal devices. In particular, my work considers four dimensions that are essential to determine basic proxemic relationships of people and devices: position, orientation, movement, and identity. I outline my previous and current work towards a framework of proxemic interaction, the design of adequate development tools, and the implementation and evaluation of applications that illustrate concepts of proxemic interactions.

Marquardt, N. and Greenberg, S. (2010)

Applying Proxemics to Mediate People's Interaction with Devices in Ubiquitous Computing Ecologies.

In Doctoral Symposium at ACM Conference on Interactive Tabletops and Surfaces - ITS 2010. (Saarbruecken, Germany), 4 pages, November 7-10.

What Caused That Touch? Expressive Interaction with a Surface through Fiduciary-Tagged Gloves

UNIVERSITY OF CALGARY, GROUPLAB, 2010

The hand has incredible potential as an expressive input device. Yet most touch technologies imprecisely recognize limited hand parts (if at all), usually by inferring the hand part from the touch shapes. We introduce the fiduciary-tagged glove as a reliable, inexpensive, and very expressive way to (a) gather input about many parts of a hand (fingertips, knuckles, palms, sides, backs of the hand), and (b) discriminate between one person's and multiple people's hands. Examples illustrate the interaction power gained by being able to identify and exploit these various hand parts.

Marquardt, N., Kiemer, J. and Greenberg, S. (2010)

What Caused That Touch? Expressive Interaction with a Surface through Fiduciary-Tagged Gloves.

In Proceedings of the ACM Conference on Interactive Tabletops and Surfaces - ACM ITS 2010. (Saarbruecken, Germany),

ACM Press, 4 pages plus video, November 7-10.

Rethinking RFID: Awareness and Control For Interaction With RFID Systems

UNIVERSITY OF CALGARY, GROUPLAB, MICROSOFT RESEARCH CAMBRIDGE, 2009-2010

People now routinely carry radio frequency identification (RFID) tags – in passports, driver’s licenses, credit cards, and other identifying cards – where nearby RFID readers can access privacy-sensitive information on these tags. The problem is that people are often unaware of security and privacy risks associated with RFID, likely because the technology remains largely invisible and uncontrollable for the individual. To mitigate this problem, we introduce a collection of novel yet simple and inexpensive tag designs. Our tags provide reader awareness, where people get visual, audible, or tactile feedback as tags come into the range of RFID readers. Our tags also provide information control, where people can allow or disallow access to the information stored on the tag by how they touch, orient, move, press, or illuminate the tag.

Marquardt, N., Taylor, A., Villar, N. and Greenberg, S. (2010)

Rethinking RFID: Awareness and Control For Interaction With RFID Systems.

In Proceedings of the ACM Conference on Human Factors in Computing Systems - ACM CHI 2010. ACM Press, 10 pages, April.

Marquardt, N., Taylor, A., Villar, N. and Greenberg, S. (2010)

Visible and Controllable RFID Tags.

In Video Showcase, DVD Proceedings of the ACM Conference on Human Factors in Computing Systems - ACM CHI 2010. ACM Press, 6 pages, April 10-15. Video and paper, demonstrated live at CHI.

Marquardt, N. and Taylor, A. (2009)

RFID Reader Detector and Tilt-Sensitive RFID Tags.

In DIY for CHI: Methods, Communities, and Values of Reuse and Customization.

(Workshop held at the ACM CHI 2009 Conference, Boston, Mass.),

(Buechley, L., Paulos, E., Rosner, D., Williams, A., Ed.), April 5.

Jain, A., Marquardt, N. and Taylor, A. (2008)

Near-Future RFID.

In Proceedings of Ethnographic Praxis in Industry Conference - EPIC.

American Anthropological Association, pages 332-333. Artifact submission (similar to Demonstration).

Revealing the Invisible: Visualizing the Location and Event Flow of Distributed Physical Devices

UNIVERSITY OF CALGARY, GROUPLAB, 2009-2010

Distributed physical user interfaces comprise networked sensors, actuators and other devices attached to a variety of computers in different locations. Developing such systems is no easy task. It is hard to track the location and status of component devices, even harder to understand, validate, test and debug how events are transmitted between devices, and hardest yet to see if the overall system behaves correctly. Our Visual Environment Explorer supports developers of these systems by visualizing the location and status of individual and/or aggregate devices, and the event flow between them. It visualizes current events as they are received and transmitted between devices, as well as the event history. Events are displayable at various levels of detail. The visualization also shows the activity of running applications that use these physical devices. The tool is highly interactive: developers can explore system behavior through spatial navigation, zooming, multiple simultaneous views, event filtering and details-on-demand, and through time-dependent semantic zooming.

Marquardt, N., Gross, T., Carpendale, S. and Greenberg, S. (2010)

Revealing the Invisible: Visualizing the Location and Event Flow of Distributed Physical Devices.

In Proceedings of the Fourth International Conference on Tangible, Embedded and Embodied Interaction - TEI 2010. (Cambridge, MA, USA), ACM Press, 8 pages, January 25-27.

The Haptic Tabletop Puck: Tactile Feedback for Interactive Tabletops

UNIVERSITY OF CALGARY, GROUPLAB, 2009

In everyday life, our interactions with objects on real tables include how our fingertips feel those objects. In comparison, current digital interactive tables present a uniform touch surface that feels the same, regardless of what it presents visually. In this paper, we explore how tactile interaction can be used with digital tabletop surfaces. We present a simple and inexpensive device – the Haptic Tabletop Puck – that incorporates dynamic, interactive haptics into tabletop interaction. We created several applications that explore tactile feedback in the area of haptic information visualization, haptic graphical interfaces, and computer supported collaboration. In particular, we focus on how a person may interact with the friction, height, texture and malleability of digital objects.

Marquardt, N., Nacenta, M., Young, J., Carpendale, S., Greenberg, S. and Sharlin, E. (2009)

The Haptic Tabletop Puck: Tactile Feedback for Interactive Tabletops.

In ITS 2009: Proceedings of ACM International Conference on Interactive Tabletops and Surfaces,

November 23–25, 2009, Banff, Alberta, Canada.

Marquardt, N., Nacenta, M., Young, J., Carpendale, S., Greenberg, S. and Sharlin, E. (2009)

The Haptic Tabletop Puck: The Video.

In DVD Proceedings of Interactive Tabletops and Surfaces - ITS 2009. (Banff, Canada), ACM Press, November 23-25.

The Continuous Interaction Space: Integrating Gestures Above a Surface with Direct Touch

UNIVERSITY OF CALGARY, GROUPLAB, 2009

The advent of touch-sensitive and camera-based digital surfaces has spawned considerable development in two types of hand-based interaction techniques. In particular, people can interact: 1) directly on the surface via direct touch, or 2) above the surface via hand motions. While both types have value on their own, we believe much more potent interactions are achievable by unifying interaction techniques across this space. That is, the underlying system should treat this space as a continuum, where a person can naturally move from gestures over the surface to touches directly on it and back again. We illustrate by example, where we unify actions such as selecting, grabbing, moving, reaching, and lifting across this continuum of space.

Marquardt, N., Jota, R., Greenberg, S. and Jorge, J. (2009)

The Continuous Interaction Space: Integrating Gestures Above a Surface with Direct Touch.

Research report 2009-925-04, Department of Computer Science,

University of Calgary, Calgary, Alberta, Canada, April.

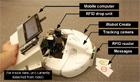

Situated Messages for Asynchronous Human-Robot Interaction

UNIVERSITY OF CALGARY, GROUPLAB, 2008/2009

An ongoing issue in human-robot interaction (HRI) is how people and robots communicate with one another. While there is considerable work in real-time human-robot communication, fairly little has been done in the asynchronous realm. Our approach, which we call situated messages, lets humans and robots asynchronously exchange information by placing physical tokens – each representing a simple message – in meaningful physical locations of their shared environment. Using knowledge of the robot’s routines, a person can place a message token at a location, where the location is typically relevant to redirecting the robot’s behavior at that location. When the robot passes near that location, it detects the message and reacts accordingly. Similarly, robots can themselves place tokens at specific locations for people to read. Thus situated messages leverage embodied interaction, where token placement exploits the everyday practices and routines of both people and robots. We describe our working prototype, introduce application scenarios, explore message categories and usage patterns, and suggest future directions.

Marquardt, N., Young, J., Sharlin, E. and Greenberg, S. (2009)

Situated Messages for Asynchronous Human-Robot Interaction.

In Adjunct Proc. Human Robot Interaction (Late Breaking Abstracts) - HRI 2009. (San Diego, California),

2 pages plus poster, March 11-13.

Prototyping Distributed Physical User Interfaces

BAUHAUS-UNIVERSITY WEIMAR, UNIVERSITY OF CALGARY, GROUPLAB, 2007/2008

This project continued the research on prototyping distributed physical user interfaces that started with the Shared Phidgets toolkit. The developed toolkit integrates distributed sensors and actuators, and provides easy-to-use programming strategies for developers to build their envisioned interactive systems. The runtime platform of the toolkit hides the complexity of hardware integration and network synchronisation. The implemented developer library and the introduced programming strategies address developers with diverse development skills. Appliance case studies illustrate the applicability of the toolkit and the provided utilities to support the rapid prototyping process.

Marquardt, N. (2008)

Developer Toolkit and Utilities for Rapidly Prototyping Distributed Physical User Interfaces,

Diploma Thesis (MSc), Cooperative Media Lab, Bauhaus-University Weimar, Germany.

Utilities for Controlling, Testing, and Debugging of Distributed Information Appliances

BAUHAUS-UNIVERSITY WEIMAR, UNIVERSITY OF CALGARY, GROUPLAB, 2007/2008

To support the testing, debugging, and deployment of distributed physical user interfaces, a collection of development utilities allows the monitoring and control of all connected components at runtime. For instance, visualisations can be used to explore the distributed hardware components and the built appliances in their geographical context. These utilities provide insight into the internal communication processes of the distributed infrastructure. Furthermore, the simulation utilities facilitate the testing and debugging of the developed appliances.

Marquardt, N. (2008)

Developer Toolkit and Utilities for Rapidly Prototyping Distributed Physical User Interfaces,

Diploma Thesis (MSc), Cooperative Media Lab, Bauhaus-University Weimar, Germany.

Tangible Interfaces and Remote Awareness

MICROSOFT RESEARCH CAMBRIDGE, 2006

The objective of this research project was the prototype development of tangible user interfaces that help to provide awareness between remote collaborators. The research furthermore included a system prototype that could be used as a surrogate/proxy of remotely located people (by using video and audio streaming and extending this device with various tangible controls, sensors, and actuators).

A second part of the research explored how digital media (such as videos and photos) can be made 'tangible', so that exploring this digital content becomes more intuitive for people. Various prototypes have been built to illustrate the concepts of 'tangible digital media'.

Shared Phidgets

UNIVERSITY OF CALGARY, GROUPLAB, 2005/2006

The Shared Phidgets toolkit provides a library and tools that enable the rapid prototyping of physical user interfaces with remotely located devices. The Phidgets can be accessed and controlled over the network, while all the network-specific technology is hidden from the application developer. .NET components and interface skins facilitate the development, and the included tools (such as the server, connector, controlling and observing applications) let the programmer easily monitor and control the Phidget components. The toolkit uses a distributed Model-View-Controller (dMVC) design pattern to represent every device so that data associated with the model is easily queried and manipulated. The programmer can therefore also access the model directly (implemented as a shared dictionary) by reading and writing its entries.
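The idea of exposing each device's model as entries in a shared dictionary can be sketched in a few lines of Python. This is only an illustrative, single-process stand-in: the real toolkit is built on .NET and synchronises over the network, and the class name `SharedDictionary`, its methods, and the key `/devices/servo1/position` are all hypothetical names chosen for this sketch.

```python
from typing import Any, Callable, Dict, List

Listener = Callable[[str, Any], None]


class SharedDictionary:
    """Toy, in-memory stand-in for a networked shared dictionary (hypothetical API)."""

    def __init__(self) -> None:
        self._entries: Dict[str, Any] = {}
        self._listeners: Dict[str, List[Listener]] = {}

    def subscribe(self, key: str, listener: Listener) -> None:
        # A view or controller registers interest in one model entry.
        self._listeners.setdefault(key, []).append(listener)

    def write(self, key: str, value: Any) -> None:
        # Writing an entry updates the model and notifies every subscriber.
        self._entries[key] = value
        for listener in self._listeners.get(key, []):
            listener(key, value)

    def read(self, key: str, default: Any = None) -> Any:
        # Reading an entry queries the current model state.
        return self._entries.get(key, default)


model = SharedDictionary()
seen = []  # records the notifications a "view" would receive
model.subscribe("/devices/servo1/position", lambda k, v: seen.append((k, v)))
model.write("/devices/servo1/position", 90)
```

In this sketch a device is controlled simply by writing a value under its key, and any subscribed view is updated as a side effect of that write.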

Marquardt, N. and Greenberg, S. (2007)

Shared Phidgets: A Toolkit for Rapidly Prototyping Distributed Physical User Interfaces.

In TEI 2007: Proceedings of the 1st International Conference on Tangible and Embedded Interaction (February 15-17, Baton Rouge, Louisiana, USA), ACM Press, pp. 13-20.

CollaborationBus

BAUHAUS UNIVERSITY WEIMAR, COOPERATIVE MEDIA LAB, 2004/2005

The CollaborationBus application is a graphical editor that provides abstractions from base technology and thereby allows multifarious users to configure Ubiquitous Computing environments. By composing pipelines users can easily specify the information flows from selected sensors via optional filters for processing the sensor data to actuators changing the system behaviour according to the users' wishes. Users can compose pipelines for both home and work environments. An integrated sharing mechanism allows them to share their own compositions, and to reuse and build upon others' compositions. Real-time visualisations help them understand how the information flows through their pipelines.
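The pipeline concept described above (sensor data flowing through optional filters to an actuator) might look roughly like the following in code. This is a minimal sketch under stated assumptions, not the CollaborationBus implementation, which is a graphical editor: the function `run_pipeline`, the filter helpers `above` and `cap`, and the sample readings are all hypothetical.

```python
from typing import Callable, Iterable, List, Optional

# A filter transforms a sensor value, or returns None to drop it (assumed convention).
Filter = Callable[[float], Optional[float]]


def run_pipeline(readings: Iterable[float],
                 filters: List[Filter],
                 actuator: Callable[[float], None]) -> None:
    """Pass each sensor reading through the filters, then on to the actuator."""
    for value in readings:
        current: Optional[float] = value
        for apply_filter in filters:
            current = apply_filter(current)
            if current is None:
                break  # a filter dropped this reading
        if current is not None:
            actuator(current)


def above(threshold: float) -> Filter:
    # Only let readings strictly above the threshold through.
    return lambda v: v if v > threshold else None


def cap(maximum: float) -> Filter:
    # Limit readings to a maximum value.
    return lambda v: min(v, maximum)


# Hypothetical example: temperature readings drive a list standing in for an actuator.
commands: List[float] = []
run_pipeline([18.0, 21.5, 25.0, 19.0], [above(20.0), cap(24.0)], commands.append)
```

Keeping filters as small composable functions mirrors the idea that users assemble pipelines from reusable parts and can share or extend each other's compositions.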

Gross, T. and Marquardt, N. (2007)

CollaborationBus: An Editor for the Easy Configuration of Complex Ubiquitous Computing Environments.

In Proceedings of the Fifteenth Euromicro Conference on Parallel, Distributed, and Network-Based Processing - PDP 2007 (Feb. 7-9, Naples, Italy). IEEE Computer Society Press, Los Alamitos, CA, (accepted).

SensBase: Ubiquitous Computing Middleware

BAUHAUS UNIVERSITY WEIMAR, COOPERATIVE MEDIA LAB, 2004/2005

Sens-ation is an open and generic service-oriented platform, which provides powerful, yet easy-to-use, tools to software developers who want to develop context-aware, sensor-based infrastructures. The service-oriented paradigm of Sens-ation enables standardised communication within individual infrastructures, between infrastructures and their sensors, but also among distributed infrastructures. On the whole, Sens-ation facilitates development, allowing developers to concentrate on the semantics of their infrastructures, and to develop innovative concepts and implementations of context-aware systems.

Gross, T., Egla, T. and Marquardt, N.

Sens-ation: A Service-Oriented Platform for Developing Sensor-Based Infrastructures.

International Journal of Internet Protocol Technology (IJIPT) 1, 3 (2006). pp. 159-167. (ISSN Online: 1743-8217, ISSN Print: 1743-8209).

Swarm Intelligence

BAUHAUS UNIVERSITY WEIMAR, VIRTUAL REALITY LAB, 2004

The basic principle of swarm intelligence (SI) algorithms is to divide complex calculations among multiple simple agents. These algorithms are inspired by observations of real ant swarms, which solve complex tasks through simple local behaviour and activities. With this swarm simulation implementation, various SI algorithms can be used to cluster data sets and to display the virtual swarm environment in a two- or three-dimensional visualization. The applied algorithms are founded on the research of V. Ramos, J. Handl, M. Dorigo, E. Bonabeau, E. D. Lumer, B. Faieta and other researchers of the swarm intelligence community. Furthermore, there are various extensions to the original systems, such as dynamic pheromone evaporation, object switching while carrying another object, dynamic picking and dropping probabilities, varying border conditions, changes of the world dimensions in two and three dimensions, as well as elegant solutions for some problems concerning orientation and boundary conditions. For the visualization of the swarms' environment we used two-dimensional worlds as well as varying three-dimensional worlds (e.g. cube, dish and tube). We analyzed the swarms' sorting behaviour in these environments and evaluated the main swarm features: flexibility, robustness, decentralized organization and self-organization. The self-organization of the swarm is based on the feedback that each agent transmits by changing the swarm's environment (e.g. picking or dropping) or by depositing pheromones. More details about our research can be found in the Developer Documentation (only available in German).
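To make the picking and dropping probabilities more concrete, here is a small Python sketch. The project description does not state which formulas were used, so the sketch assumes the commonly cited response functions from the ant-clustering literature (the basic model that Lumer and Faieta built upon); the function names and the constants `k1` and `k2` are illustrative assumptions, not values taken from the project.

```python
def pick_probability(local_density: float, k1: float = 0.1) -> float:
    """Chance that an unladen agent picks up an item.

    local_density is the perceived fraction of similar items nearby (0..1).
    Assumed formula: (k1 / (k1 + f)) ** 2, high when the item is isolated.
    """
    return (k1 / (k1 + local_density)) ** 2


def drop_probability(local_density: float, k2: float = 0.15) -> float:
    """Chance that a laden agent drops its item.

    Assumed formula: (f / (k2 + f)) ** 2, high when similar items are nearby.
    """
    return (local_density / (k2 + local_density)) ** 2


# An isolated item is always picked up and never dropped in empty space.
isolated_pick = pick_probability(0.0)
isolated_drop = drop_probability(0.0)
```

Because agents tend to pick up isolated items and drop them near similar ones, clusters emerge from purely local decisions; a "dynamic" variant could adjust `k1` and `k2` over time.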